Online retail has become incredibly competitive and unsafe. Retailers are under constant assault from nefarious actors in the Internet's underbelly, including large industry competitors. These threat actors leverage bad bots in numerous ways that hurt online retailers.

Bad bots scrape prices and product data, perform click fraud, and endanger the overall security of e-commerce websites, customer loyalty, and brand reputation. Of all bad bot threats, price scraping and product data scraping are the most rampant and costly to online retailers.

An industry built to scrape pricing and product data

Online retailers have spent years and millions of dollars establishing their brand presence online and garnering a loyal following of customers. These customers represent the lifeblood of the business. Yet a large and growing pool of online competitors is working hard each day to steal away these customers and permanently win their business.

These bad actors scrape information from legitimate online retail sites to gain product and pricing intelligence, which they use to undercut those retailers' prices or position against their offerings. Whether termed ‘price scrapers’, ‘pricing bots’, or ‘pricing intelligence solutions’, an entire industry has grown around the use of automated bots dedicated to scraping as much data as possible from online retailers’ websites.

These are the five most common types of data scraping activities online retailers can expect to find on their websites:

- Price Scraping – Bots target the pricing section of a site and scrape pricing information to share with online competitors.

- Product Matching – Bots collect and aggregate hundreds, or thousands, of data points from a retail site in order to make exact matches against a retailer’s wide variety of products.

- Product Variation Tracking – Bots scrape product data to a level that accounts for multiple variants within a product or product line, such as color, cut and size.

- Product Availability Targeting – Bots scrape product availability data to enable competitive positioning against an online retailer’s products based on inventory level and availability.

- Continuous Data Refresh – Bots visit the same online retail site on a regular basis so that buyers of the scraped data can react to changes made by the targeted retail site.

Preventing price scraping bots can be difficult

There are several reasons for this. First, the nature of the industry makes identifying and prosecuting those who launch bots extremely difficult, time-consuming and expensive. Bots can come from practically anywhere in the world, and most often originate from well-known hosting providers and networks that organizations trust. Second, bots used for clearly illegal activity frequently operate from international locations, where US laws provide little or no recourse even once their originators are identified. Third, most bot technologies are not even considered criminal. In fact, vendors that offer price scraping solutions publicly tout the names of large, legitimate businesses as their customers.

As more vendors compete to offer increasingly sophisticated price scraping and product data scraping products, an arms race of capabilities is taking place. For online retailers, this means a sharply growing need for a holistic approach to bot defense that is as sophisticated and adaptable as the threat itself.

The how and why of price scraping from ‘pricing intelligence solutions’

Termed ‘pricing intelligence solutions’, these products bring advanced features and higher levels of sophistication to attacks on retailer websites. Built atop traditional price scraping functionality, they incorporate additional elements to steal and use victims’ retail site data in the most scalable and damaging manner possible. Here’s how their price scraping techniques work and what they’re after:

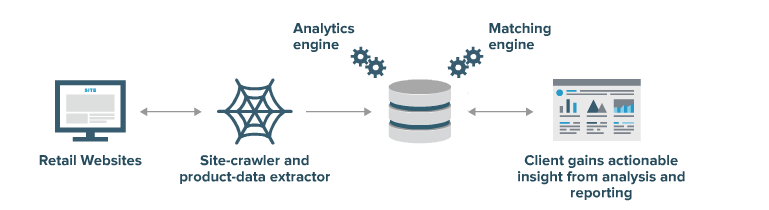

- Crawling: Ever-changing, custom-built bots crawl a retailer’s site. Many hit the site each day, specifically targeting the product pages and scanning every product.

- Morphing: Bots take the form of data extractors that morph in line with changes to a retailer’s site. This way, they can keep extracting data even when the retailer changes the site or adds complexity to it.

- Matching: Bots collect thousands of pieces of information to make exact matches against a retailer’s products. They lift pricing, inventory and availability data from the shopping cart and other site locations.

- Analytics: Leveraging semantic analysis and data mining, analytics engines sift through the data scraped by bots and match prices and products, even if the scraped data is not an exact match. This and other advanced analytics enable the recipient of the scraped data to make multiple competitive positioning moves that steal away the targeted retailer’s competitive advantage.
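
The Analytics step describes matching products even when the scraped data is not an exact match. The sketch below shows one simple way such fuzzy matching can work, using character-level similarity from Python's standard-library `difflib`. It is an illustrative assumption, not how any particular pricing intelligence vendor operates: the function names (`normalize`, `similarity`, `best_match`), the sample catalog and the 0.8 threshold are all hypothetical, and commercial tools likely rely on far richer semantic and attribute-based matching.

```python
from difflib import SequenceMatcher
from typing import Iterable, Optional, Tuple


def normalize(title: str) -> str:
    """Lowercase a title and replace punctuation with spaces so cosmetic differences don't block a match."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in title.lower())
    # Collapse runs of whitespace into single spaces.
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """Return a similarity ratio in [0, 1]; 1.0 means the normalized titles are identical."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def best_match(
    scraped_title: str, catalog: Iterable[str], threshold: float = 0.8
) -> Optional[Tuple[str, float]]:
    """Return (catalog_title, score) for the closest catalog entry, or None if none clears the (hypothetical) threshold."""
    scored = [(title, similarity(scraped_title, title)) for title in catalog]
    if not scored:
        return None
    title, score = max(scored, key=lambda pair: pair[1])
    return (title, score) if score >= threshold else None


if __name__ == "__main__":
    # Hypothetical catalog entries, for illustration only.
    catalog = [
        "Men's Slim Fit Oxford Shirt - Blue, Large",
        "Women's Running Shoe Size 8",
    ]
    # A scraped title that differs in punctuation and abbreviation still matches.
    print(best_match("mens slim-fit oxford shirt blue L", catalog))
    # An unrelated product falls below the threshold.
    print(best_match("wireless headphones", catalog))
```

Running the file prints a tuple pairing the shirt listing with a score of about 0.93, followed by `None`. Even this naive approach tolerates differences in punctuation and abbreviation, which helps explain why scraped data does not need to line up perfectly to be useful to a competitor.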

The ramifications of this type of advanced approach to retail site scraping are dire for many online retailers. The fact is, online retailers must stop bots from scraping their data in the first place, before the real harm occurs downstream. Otherwise, retailers face a losing battle once competitors apply advanced analytics against the data. This final step can take apart an online retailer’s business — product by product.
