Imperva Blog

Trending

XSS Marks the Spot: Digging Up Vulnerabilities in ChatGPT

Feb 19, 2024 6 min read

CVE-2023-50164: A Critical Vulnerability in Apache Struts

Dec 19, 2023 2 min read

CVE-2023-22524: RCE Vulnerability in Atlassian Companion for macOS

Dec 14, 2023 5 min read

Imperva Detects Undocumented 8220 Gang Activities

Dec 14, 2023 3 min read

Analysis: A Ransomware Attack on a PostgreSQL Database

Oct 24, 2023 3 min read

See how we can help you secure your web apps and data.

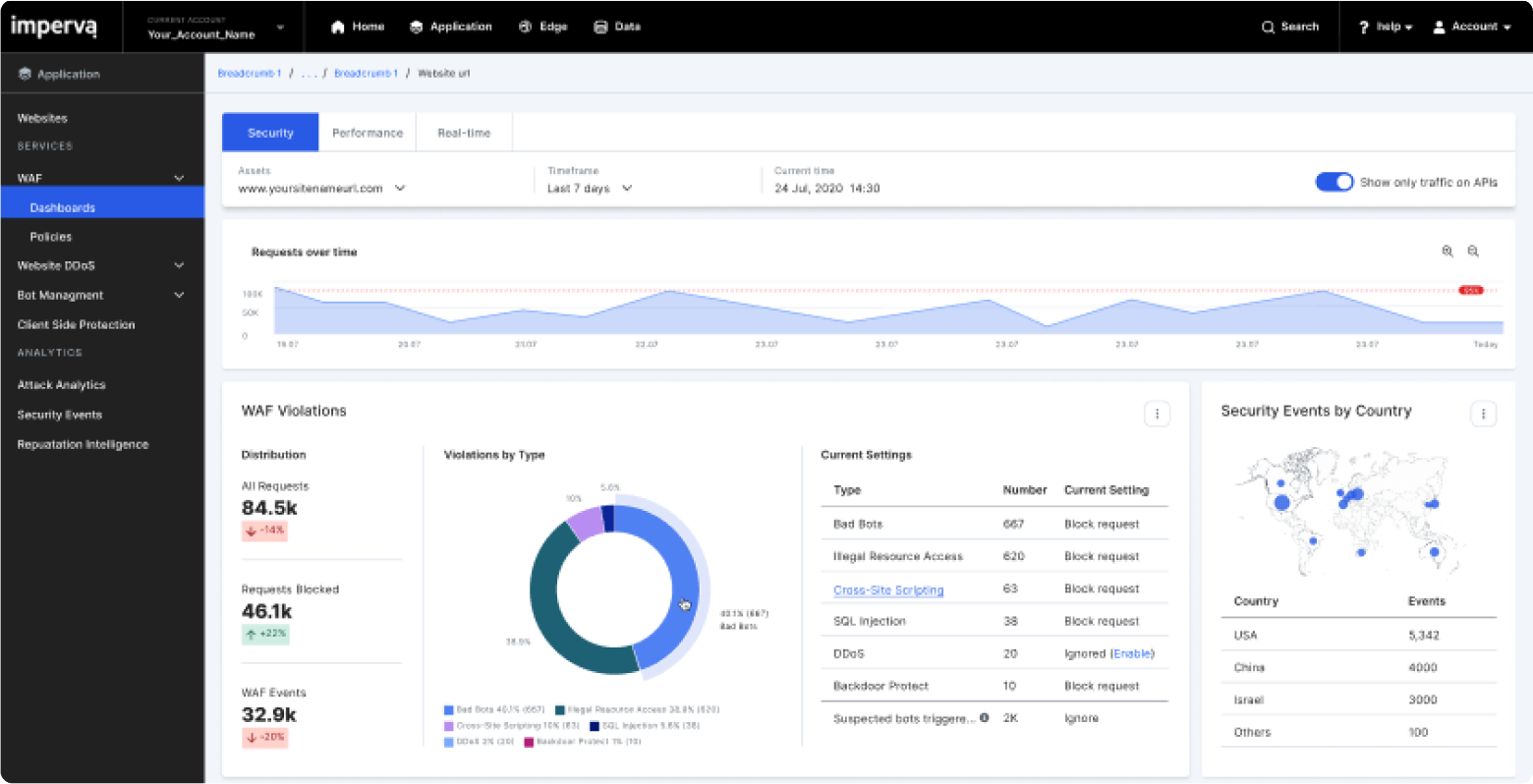

The State of API Security in 2024

Learn about the current API threat landscape and the key security insights for 2024

Protect Against Business Logic Abuse

Identify key capabilities to prevent attacks targeting your business logic

The State of Security Within eCommerce in 2022

Learn how automated threats and API attacks on retailers are increasing

Prevoty is now part of Imperva Runtime Protection

Protection against zero-day attacks

No tuning required; highly accurate out of the box

Effective against OWASP top 10 vulnerabilities

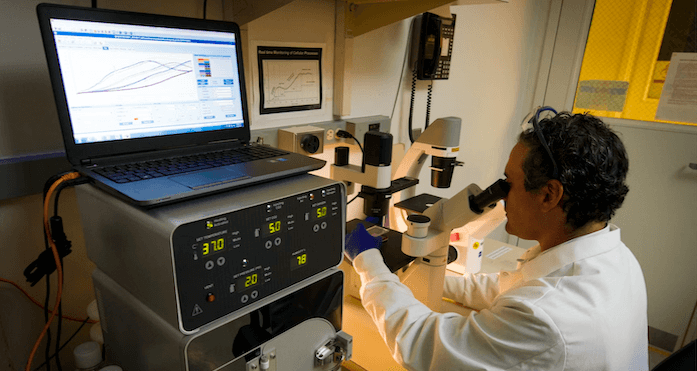
